Probability and other paradoxes

by Al Sims

This is a collection of relatively simple probability problems and paradoxes. In each case the solution looks easy at first thought, but either it turns out to be different from what one logically expects, or it is actually correct but can apparently be proven wrong with sound-looking logical arguments, thus creating a paradox. They can all be called by the same name: brain teasers.

A. Probability problems

- The Monty Hall problem

- Variations of the Monty Hall problem

- Two envelopes problem

- The three coins problem

B. Probability paradoxes

- Bertrand's Box paradox

- St. Petersburg paradox

- Necktie paradox

- Boy or Girl paradox

- The Birthday paradox

- Streak of heads paradox

C. Other paradoxes

- Crocodile dilemma

- The card paradox

- Lying paradoxes

- The treachery of images

- Socratic paradox

- Unexpected test paradox

- The Sorites' paradox

- The paradox of the court

- Always-winning roulette method

D. Short, funny paradoxes

A. Probability problems

The Monty Hall problem

(Known also as "Monty Hall paradox" and "3-door game")

I start with this classic problem, which is perhaps best described in Wikipedia.

It is a probability puzzle based on the American television game show Let's Make a Deal. The name comes from the show's original host, Monty Hall. It is a paradox in that the result appears absurd but is demonstrably true.

The problem was originally posed in a letter by Steve Selvin to the American Statistician in 1975. A well-known statement of the problem was published in Marilyn vos Savant's "Ask Marilyn" column in Parade magazine in 1990.

Imagine that you are a player in a game show and you are presented with three doors. Behind one door is a car. Behind the other doors are goats. You pick a door, let's say Door 1, but the door is not opened yet. Then the host, who knows what's behind the doors (and you know that he knows), opens another door, let's say Door 2, behind which is a goat. He then asks you, "Do you want to pick Door 3?" Would you change your initial choice?

As one cannot be certain which of the two remaining unopened doors is the winning door, and initially all doors were equally likely, most people assume that each of the two remaining closed doors has an equal probability and conclude that switching doesn't change anything. Hence the usual answer is "I stay with my chosen door". However, if you switch doors, you double the overall probability of winning the car from 1/3 to 2/3!

There are several approaches to solving the Monty Hall problem, all giving the same result: a player who switches has a 2/3 chance of winning the car.

Simple solutions

1) Switching loses if and only if the player initially picks the car, which happens with probability 1/3, so switching must win with probability 2/3.

2) Below are three possible arrangements of one car and two goats behind the three doors and the result of switching or staying after initially picking Door 1 in each case:

| Door 1 (chosen) | Door 2 | Door 3 | Result if switching | Result if staying |

|---|---|---|---|---|

| Car | Goat | Goat | Goat | Car |

| Goat | Car | Goat | Car | Goat |

| Goat | Goat | Car | Car | Goat |

3) By opening his door, Monty is actually saying to the player, "There are two doors you did not choose, and the probability that the prize is behind one of them is 2/3. Your choice of door 1 has a chance of 1 in 3 of being the winner. I have not changed that. But by eliminating Door 2, I have shown you that the probability that Door 3 hides the prize is 2 in 3."

4) (Mine) When you choose a door, you know well that it has a 1/3 chance of hiding the car. The remaining 2/3 chance lies with the other two doors together. After the host opens one of them and shows a goat, this 2/3 does not change, i.e. it now rests entirely on the one remaining closed door.

You can play/test the game Let's Make a Deal here (as long as the page is still alive!). You will find out that:

1) If you always stick to the same door, you win only about one time in three.

2) If you always switch to the other door, you win about two times in three, i.e. 'win' results are about twice as many as 'lose' results!
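A quick simulation is another way to test both strategies, in case the online game is no longer available. The following is only a minimal sketch: the function names `play` and `win_rate`, the number of rounds and the seed are my own choices for illustration. It should report a win rate near 1/3 for staying and near 2/3 for switching, in line with the solutions above.

```python
import random


def play(switch, rng):
    """Play one round; return True if the player ends up with the car."""
    doors = [0, 1, 2]
    car = rng.choice(doors)
    pick = rng.choice(doors)
    # The host knowingly opens a door that is neither the pick nor the car.
    opened = rng.choice([d for d in doors if d != pick and d != car])
    if switch:
        # Move to the only door that is neither picked nor opened.
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == car


def win_rate(switch, rounds=100_000, seed=1):
    """Fraction of rounds won with the given strategy."""
    rng = random.Random(seed)
    return sum(play(switch, rng) for _ in range(rounds)) / rounds


if __name__ == "__main__":
    print("stay:  ", win_rate(switch=False))
    print("switch:", win_rate(switch=True))
```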

Variations of the Monty Hall problem

(Re: http://www.archimedes-lab.org/monthly_puzzles_61.html)

I continue with some variations of the Monty Hall problem.

There are 3 playing cards on the table, face-down. One of them is the Queen of Hearts (QoH). The game "host" asks you to guess which one it is. OK, so you point to card C. Then card B is turned over by the "host" and it isn't the QoH (it is a non-QoH). So the situation schematically is the following:

| Card A | Card B | Card C |

|---|---|---|

| ? | non-QoH | ? |

At this point, you are asked whether you want to switch to card A or not.

You may think, "I don't have any reason to switch cards because there's a QoH and a non-QoH remaining, so the probability of winning is the same by switching or not, it's 50%."

There are two variations. Let's see what happens in each one.

Variation #1

The "host" knows where the QoH card is. And you know that. In this case, the chance of winning is doubled when you switch to the other card (A) rather than sticking with your original choice (card C), because the "host" deliberately turned a non-QoH card over.

Why's that?

There are 3 possible situations corresponding to your initial choice, each with equal probability (1/3):

1) You originally picked non-QoH #1. The "host" has deliberately shown you non-QoH #2.

2) You originally picked non-QoH #2. The "host" has deliberately shown you non-QoH #1.

3) You originally picked the QoH card. The "host" has shown you either of the two non-QoH cards.

If you choose to switch, you win in the first two cases. If you choose to stay with the initial choice, you win only in the third case. So switching wins in 2 out of 3 equally likely cases, i.e. the probability of winning by switching is 2/3. In other words, a player who has a policy of always switching will win on average two times out of three.

Variation #2

The "host" doesn’t really know where the QoH card is and you know that. In this case, when the "host" turns the non-QoH card over, the probability that you originally picked the QoH card increases from 1/3 to 1/2. And the odds in this case are equal, whether you change your initial choice or not.
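Variation #2 is the less intuitive one, so here is a hedged sketch that simulates it. I assume that the "host" turns over one of the two cards you did not pick completely at random, and that rounds in which the QoH itself gets exposed are simply thrown away, since they do not match the story. The function name `ignorant_host_rates` and its parameters are made up for this illustration.

```python
import random


def ignorant_host_rates(rounds=200_000, seed=2):
    """Return (stay win rate, switch win rate) given that a non-QoH was shown."""
    rng = random.Random(seed)
    stay_wins = switch_wins = valid = 0
    for _ in range(rounds):
        queen = rng.randrange(3)
        pick = rng.randrange(3)
        # The host does not know where the QoH is: he turns over a random
        # card among the two that were not picked.
        revealed = rng.choice([c for c in range(3) if c != pick])
        if revealed == queen:
            continue  # the QoH was exposed; this round does not match the story
        valid += 1
        if pick == queen:
            stay_wins += 1
        else:
            switch_wins += 1
    return stay_wins / valid, switch_wins / valid


if __name__ == "__main__":
    print(ignorant_host_rates())
```

Both rates should come out close to 1/2, in contrast to the 1/3 and 2/3 of Variation #1.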

Two envelopes problem

(Re: https://en.wikipedia.org/wiki/Two_envelopes_problem)

Also known as the "exchange paradox".

You are given two indistinguishable envelopes and you are informed that one of them contains twice as much money as the other. You may select either of the envelopes at random and you will receive the money in it. Now, after you select one of them and before opening it, you are given the opportunity to take the other envelope instead. Should you switch to the other envelope?

You may think, "I don't have any reason to take the other envelope, since the chances that it is the one with more money are the same as the chances that the one I have already selected is."

Let's see.

Suppose that the amount of money in the envelope you first choose is x. Then the other envelope has a 50% chance of containing 2x and a 50% chance of containing x/2, i.e. an expected value of 0.5*2x + 0.5*(x/2) = x + 0.25x = 1.25x. So you should switch.

This, however, creates the following paradox: If one switches, by the same reasoning one has to switch back! And this can go on in an endless cycle! So there must be something wrong with above reasoning. Indeed, two things are wrong:

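1) The formula mixes two different situations under the same symbol: in the term 2x, x stands for the smaller amount, while in the term x/2, x stands for the larger amount. Treating x as one fixed quantity in both terms is illegitimate, so the above reasoning is incorrect.

2) The same "expectation" of 1.25x would hold for either of the envelopes, and each envelope cannot be worth more than the other! If the two amounts are a and 2a, the expected content of either envelope is 1.5a, whether you switch or not.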
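To see that switching gains nothing, one can simulate the game. This is only a sketch under an assumption that is not part of the problem: the smaller amount is drawn uniformly from 1 to 100 dollars. The function name `average_payout` is likewise just illustrative.

```python
import random


def average_payout(switch, rounds=100_000, seed=3):
    """Average amount received when always switching or always staying."""
    rng = random.Random(seed)
    total = 0
    for _ in range(rounds):
        small = rng.randint(1, 100)      # assumed range for the smaller amount
        envelopes = [small, 2 * small]
        rng.shuffle(envelopes)           # the envelopes are indistinguishable
        chosen, other = envelopes
        total += other if switch else chosen
    return total / rounds


if __name__ == "__main__":
    print("stay:  ", average_payout(switch=False))
    print("switch:", average_payout(switch=True))
```

Under this assumption both averages should come out near 75.75 dollars (1.5 times the average smaller amount of 50.5), not 1.25 times one another.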

The three coins problem

(Re: http://www.quantdec.com/envstats/notes/class_04/prob_sim.htm)

A box contains three coins. One is normal, one has two heads and the other has two tails. One coin falls out of the box onto the floor, heads up. What is the probability that the other side is also a head?

(Think of the solution as long as needed before going on . . .)

The table below contains all possible cases:

| Coin | Side up | Side down |

|---|---|---|

| 2 heads | head #1 | head #2 |

| 2 heads | head #2 | head #1 |

| 2 tails | tail #1 | tail #2 |

| 2 tails | tail #2 | tail #1 |

| normal | head | tail |

| normal | tail | head |

In the 3 cases above where a head is up, there are 2 cases with a head down and 1 case with a tail down. So the probability is 2/3.
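The counting in the table can be reproduced mechanically. The sketch below (variable names are my own) lists the six equally likely (coin, side up, side down) cases and counts those with a head up.

```python
from fractions import Fraction

# Each coin is described by its two sides.
coins = {"2 heads": ("H", "H"), "2 tails": ("T", "T"), "normal": ("H", "T")}

# Every coin can land with either side up: six equally likely cases.
outcomes = []
for name, (a, b) in coins.items():
    outcomes.append((name, a, b))   # (coin, side up, side down)
    outcomes.append((name, b, a))

heads_up = [o for o in outcomes if o[1] == "H"]
heads_down_too = [o for o in heads_up if o[2] == "H"]
probability = Fraction(len(heads_down_too), len(heads_up))
print(probability)  # 2/3
```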

B. Probability paradoxes

Bertrand's Box paradox

(Re: https://en.wikipedia.org/wiki/Bertrand%27s_box_paradox)

(I have simplified the description.)

There are three boxes, each with two drawers. Each drawer contains a coin. One box has a gold coin in each drawer (GG), one box has a silver coin in each drawer (SS) and the other has a gold coin in one drawer and a silver coin in the other (GS). A box is chosen at random, and then one of its drawers is opened. The coin in that drawer is gold. What is the chance that the other drawer also contains a gold coin?

At first sight, it seems that the probability is 50%. However, it isn't! Here's why:

Since a gold coin is found, it means that the box is either GG or GS. These 2 boxes have a total of 3 gold coins and 1 silver coin. If we subtract the gold coin already found, there remain 2 gold coins and 1 silver coin. Hence, the probability that the other coin in the box is also gold is 2/3.

Or, we can think in the following way, examining all three, equally possible cases:

1) Drawer G of GS was chosen, so the other drawer contains a silver coin (1/3)

2) Drawer G1 of GG was chosen, so the other drawer contains also a gold coin (1/3)

3) Drawer G2 of GG was chosen, so the other drawer contains also a gold coin (1/3)

In 2 of the three cases the other coin is also gold, i.e. the probability of this happening is 2/3.
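The same result follows from Bayes' rule, which may be a useful cross-check on the counting argument. The sketch below uses exact fractions; the dictionary `p_gold` (the chance of drawing a gold coin from each box) and the other names are only illustrative.

```python
from fractions import Fraction

prior = Fraction(1, 3)  # each box is equally likely to be chosen

# Probability that a randomly opened drawer of each box shows a gold coin.
p_gold = {"GG": Fraction(1), "GS": Fraction(1, 2), "SS": Fraction(0)}

# Total probability of seeing a gold coin: 1/3*1 + 1/3*1/2 + 1/3*0 = 1/2.
p_gold_total = sum(prior * p for p in p_gold.values())

# Probability that the box is GG, given that a gold coin was seen.
posterior_gg = prior * p_gold["GG"] / p_gold_total
print(posterior_gg)  # 2/3
```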

Variations:

Since gold coins are difficult to get, and in order to do without the boxes, 3 pairs of regular coins may be used instead, placed on the table and covered, with heads up and tails up as follows: HH, TT and HT. Or 3 pairs of black and red playing cards face down, etc.

St. Petersburg paradox

(Re: https://en.wikipedia.org/wiki/Petersburg_paradox)

You pay a fixed fee to enter a game of chance in a casino, in which a fair coin is tossed repeatedly until a tail appears, ending the game. The pot starts at 2 dollars and is doubled every time a head appears. You win whatever is in the pot after the game ends. Thus you win 2 dollars if a tail appears on the first toss, 4 dollars if a head appears on the first toss and a tail on the second, 8 dollars if a head appears on the first two tosses and a tail on the third, and so on. In short, you win 2^k dollars if the coin is tossed k times until the first tail appears. What would be a fair price to pay the casino for entering the game?

Let's see what the average payout is: with probability 1/2 the player wins 2 dollars, with probability 1/4 the player wins 4 dollars, with probability 1/8 the player wins 8 dollars, and so on. The expected value is thus EV = 1/2*2 + 1/4*4 + 1/8*8 + ... = 1 + 1 + 1 + ... = infinity.

Assuming that the game can continue as long as the coin toss results in heads and that the casino has unlimited resources, this sum grows without bound and so the expected win for repeated play is an infinite amount of money.

Considering nothing but the expected value of the net change in one's monetary wealth, one should therefore play the game at any price if offered the opportunity. Yet, in published descriptions of the game, many people expressed disbelief in the result. It has been found that "few people would pay even $25 to enter such a game" and most commentators would agree. The paradox is the discrepancy between what people seem willing to pay to enter the game and the infinite expected value.
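The assumption of unlimited casino resources matters a great deal here. As a hedged illustration (the cap is not part of the problem as stated), suppose the pot can never exceed 2^L dollars, so that every game lasting longer than L tosses simply pays the cap. The sketch below computes the expected value exactly; the function name `capped_expected_value` and its parameter are hypothetical.

```python
from fractions import Fraction


def capped_expected_value(max_doublings):
    """Expected payout when the pot can never exceed 2**max_doublings dollars."""
    cap = 2 ** max_doublings
    # A game that ends on toss k (k <= max_doublings) has probability 1/2**k
    # and pays 2**k dollars, so each such term contributes exactly 1 dollar.
    ev = sum(Fraction(1, 2 ** k) * 2 ** k for k in range(1, max_doublings + 1))
    # All longer games together have probability 1/2**max_doublings
    # and pay the cap.
    ev += Fraction(1, 2 ** max_doublings) * cap
    return ev


if __name__ == "__main__":
    # A cap of 2**30 dollars is a little over one billion dollars.
    print(capped_expected_value(30))  # 31
```

So with a cap of about one billion dollars the game is worth only 31 dollars on average, which is much closer to what people seem willing to pay.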

I created and ran a simple computer program to find out the average earnings over a large number of rounds. First of all, the average number of tosses until a tail comes up is found to be 2. This is as expected: the first toss always takes place (one earns at least 2 dollars whatever happens), and after that each further toss occurs with half the probability of the previous one, so the average is 1 + 1/2 + 1/4 + ... = 2 tosses per round.

Now, here's another paradox within the St. Petersburg paradox that I discovered: since the average number of tosses is 2, one would logically expect the average earnings to be 2^2 = 4 dollars. Although this sounds quite logical, it is very far from the truth. Why?

If the earnings grew linearly with the number of tosses, i.e. if 2 dollars were added on each toss, then the average earnings would indeed be 4 dollars. But the earnings do not grow linearly but exponentially, so the rare long rounds contribute enormous amounts to the average. Indeed, in the above test, I found that the average earnings range from 10 to 25 dollars! So the paradox lies in the big discrepancy between the average number of tosses and the average earnings.

Which of the two averages should then be taken as a basis for deciding on the entry fee one would be willing to pay for this game of chance? Evidently, it's the average earnings, which is the more realistic statistic and is what finally counts.

Here is the code of the program I used for testing:

(Q)BASIC

    DEFINT A-Z
    DIM tottosses AS LONG, earnings AS LONG, totearnings AS LONG
    RANDOMIZE TIMER
    tottosses = 0: totearnings = 0: rounds = 32000
    FOR i = 1 TO rounds
        tosses = 0: earnings = 2
        DO
            ' Toss the coin: 1 = heads (the pot doubles), 2 = tails (the round ends)
            tosses = tosses + 1: a = INT(2 * RND) + 1
            IF a = 1 THEN earnings = earnings * 2 ELSE EXIT DO
        LOOP
        tottosses = tottosses + tosses
        totearnings = totearnings + earnings
    NEXT i
    avtosses = CINT(tottosses / rounds): avearnings = CINT(totearnings / rounds)
    PRINT "Average number of tosses, average earnings:"; avtosses; avearnings
    END

Python

    import random

    random.seed()
    rounds = 32000
    tottosses = 0
    totearnings = 0
    for i in range(rounds):
        tosses = 0
        earnings = 2
        while True:
            # Toss the coin: 1 = heads (the pot doubles), 2 = tails (the round ends)
            tosses += 1
            a = random.randint(1, 2)
            if a == 1:
                earnings *= 2
            else:
                break
        tottosses += tosses
        totearnings += earnings
    avtosses = round(tottosses / rounds)
    avearnings = round(totearnings / rounds)
    print('Average number of tosses, average earnings:', avtosses, avearnings)

If you run it many times, you will see a stable average number of tosses of 2 and average earnings normally lying between 15 and 25, but with big variations every now and then. Don't be surprised if you suddenly see a value like 540!

Necktie paradox

(Re: https://en.wikipedia.org/wiki/Necktie_paradox)

It is a variation of the "Two envelopes problem" described above.

Two men are each given a necktie by their wives as a Christmas present. Over drinks they start arguing over who has the more expensive necktie. They agree to have a wager over it. They will consult their wives and find out which necktie is more expensive. The terms of the bet are that the man with the more expensive necktie has to give it to the other as the prize.

One of the men reasons as follows: Winning and losing are equally likely. If I lose, I will lose the value of my necktie. But if I win, I win more than the value of my necktie. Therefore the wager is to my advantage.

Assuming that both men think equally logically, the other man will think the same thing. So, paradoxically, it seems that both think they have an advantage in this wager. Which is impossible.

What's the flaw in this?

What we call the "value of my necktie" in the losing scenario is the same amount as what we call "more than the value of my necktie" in the winning scenario. Accordingly, neither man has the advantage in the wager. For example, if the price of one tie is $10 and of the other $20, the man who loses gives up $20, which is exactly the amount the other man wins.
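A small simulation makes the symmetry visible. It is only a sketch under my own assumption that both tie prices are drawn independently from the same range (10 to 100 dollars); the function name `average_gain` is illustrative.

```python
import random


def average_gain(rounds=100_000, seed=7):
    """Average net gain per wager for one of the two men."""
    rng = random.Random(seed)
    total = 0
    for _ in range(rounds):
        mine = rng.randint(10, 100)   # assumed price range, in dollars
        his = rng.randint(10, 100)
        if mine > his:
            total -= mine             # my tie is more expensive: I hand it over
        elif his > mine:
            total += his              # his tie is more expensive: I receive it
        # equal prices: nobody wins or loses
    return total / rounds


if __name__ == "__main__":
    print(average_gain())
```

The average gain should hover around zero, i.e. neither man has an advantage.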

Boy or Girl paradox

(Re: https://en.wikipedia.org/wiki/Boy_or_Girl_paradox)

Problem #1:

The Jones family have two children. The older child is a girl. What is the probability that both children are girls?

(Think of the solution as long as needed before going on . . .)

Only two possible cases meet the criteria specified in the question: GB and GG. Since both of them are equally likely, and only one of the two, GG, includes two girls, the probability that the younger child is also a girl is 1/2.

***

Problem #2:

The Jones family have two children. At least one of them is a boy. What is the probability that both children are boys?

(Think of the solution as long as needed before going on . . .)

The possible cases are: GB, BG and BB. Schematically:

| Older child | Younger child |

|---|---|

| Girl | Boy |

| Boy | Girl |

| Boy | Boy |

From the above we can see that the probability that both are boys is 1/3.
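Both answers can be checked by listing the four equally likely families and filtering them. A minimal sketch, assuming boys and girls are equally likely and the two births are independent (variable names are my own):

```python
from fractions import Fraction
from itertools import product

# All equally likely (older, younger) combinations: BB, BG, GB, GG.
families = list(product("BG", repeat=2))

# Problem #1: the older child is a girl.
older_girl = [f for f in families if f[0] == "G"]
p_two_girls = Fraction(sum(f == ("G", "G") for f in older_girl), len(older_girl))

# Problem #2: at least one child is a boy.
some_boy = [f for f in families if "B" in f]
p_two_boys = Fraction(sum(f == ("B", "B") for f in some_boy), len(some_boy))

print(p_two_girls, p_two_boys)  # 1/2 1/3
```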

Variation:

A postman knocks on the door of a house where two children live, and the door is answered by a little girl. What are the chances that the other child is also a girl?

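Answer: 1/3, if all we take from the observation is that at least one of the children is a girl (as in Problem #2). If, on the other hand, we assume that each of the two children is equally likely to answer the door, the situation is like Bertrand's Box and the answer is 1/2.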

The Birthday paradox

(Re: http://www.scientificamerican.com/article.cfm?id=bring-science-home-probability-birthday-paradox)

How many people do you think it would take to survey, on average, to find two people who share the same birthday? Due to probability, sometimes an event is more likely to occur than we believe it to. In this case, if you survey a random group of just 23 people there is actually about a 50% chance that two of them will have the same birthday. This is known as the birthday paradox. Don't believe it's true? You can test it and see mathematical probability in action!

The birthday paradox, also known as the birthday problem, states that in a random group of 23 people, there is about a 50% chance that two people have the same birthday. Is this really true? There are multiple reasons why this seems like a paradox. One is that when in a room with 22 other people, if a person compares his or her birthday with the birthdays of the other people it would make for only 22 comparisons — only 22 chances for people to share the same birthday.

But when all 23 birthdays are compared against each other, it makes for much more than 22 comparisons. How much more? Well, the first person has 22 comparisons to make, but the second person was already compared to the first person, so there are only 21 comparisons to make. The third person then has 20 comparisons, the fourth person has 19 and so on. If you add up all possible comparisons (22 + 21 + 20 + 19 + … +1) the sum is 253 comparisons, or combinations. Consequently, each group of 23 people involves 253 comparisons, or 253 chances for matching birthdays.
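The 50% figure can be computed directly. The sketch below assumes 365 equally likely birthdays (no leap years, no seasonal effects) and multiplies out the probability that all birthdays are different; the function name `shared_birthday_probability` is just illustrative.

```python
from math import comb


def shared_birthday_probability(people, days=365):
    """Probability that at least two of the people share a birthday."""
    p_all_different = 1.0
    for i in range(people):
        # Person number i+1 must avoid the i birthdays already taken.
        p_all_different *= (days - i) / days
    return 1 - p_all_different


if __name__ == "__main__":
    print(comb(23, 2))                                # 253 pairs to compare
    print(round(shared_birthday_probability(23), 4))  # 0.5073
```

With 22 people the probability is still below 50%, so 23 is the smallest group for which a shared birthday is more likely than not.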

Streak of heads paradox

Here is a case that I am sure a lot of people have been confused about, or at least thought of as a kind of paradox.

We toss a fair coin and get heads up. We toss again and get heads up. As this goes on, every time we feel more and more strongly that it's time for tails to show up. That is, we feel that the chances of tails showing up grow with each toss. When we have reached a large number of tosses, we are almost certain that the next toss will be tails up.

Coin tossing may be replaced with drawing a card from a well shuffled deck and getting a red card for a number of consecutive drawings, betting on Red in roulette, etc.

That it's time for tails to show up, and the accumulated frustration of this not happening, is a real feeling we get. We think, "What is the probability that heads continues to show up after, e.g., 9 consecutive times of heads up?" Apparently very low, right? "That would be too much", we could think.

But let's cool down and think in pure probability terms.

There is a basic, fundamental law that applies at any time we are tossing a coin, drawing a card from the deck, betting on Red in roulette, etc. The probability for heads or a red to come up is 1/2. Similarly of course for tails up or a black card. Always. At any time. Independently of what has happened until then. The Universe does not keep records. We do. And these records help us produce statistics, etc.

Now, let's look at the tossing problem from another angle. We could do an experiment and say, "We are going to toss a coin 10 times. What is the probability that all outcomes are heads up?" Easy: it's 1/(2^10), i.e. 1/1024 = ~0.1%. And what is the probability of getting at least one tails up? It's 1023/1024, i.e. ~99.9%, which is 1023 times greater. So we note down the outcome of each toss. Then, after 9 heads in a row, we may think that since at least one tails up is 1023 times more probable than 10 heads up, it is far more probable that the 10th toss is tails up. And we may believe this strongly.

Well, if we come back to the fundamental law described above, we have to come down to earth and accept that the probability of a 10th heads up is the same as the probability of a 10th tails up, i.e. 50%. That is, getting HHHHHHHHHH is just as likely as getting HHHHHHHHHT.

OK, but what about the 1023:1 ratio as a statistic? Does HHHHHHHHHH invalidate it? No: it is an extreme case, and statistics always allow for such deviations from the normal. Moreover, that ratio refers to the whole series of 10 tosses before any coin is tossed. Once 9 heads have already come up, the improbable part has already happened and only one toss with two equally likely outcomes remains. If we were to repeat the 10-toss experiment a large number of times (e.g. many thousands of times), we would see that on average the outcomes are as expected, and we could also confirm the 1023:1 ratio.
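Instead of repeating the experiment, one can simply list all 1024 equally likely sequences of 10 tosses and count. The following sketch (variable names are my own) counts the sequences with at least one tail, and then looks only at the sequences that begin with 9 heads.

```python
from itertools import product

# All equally likely sequences of 10 tosses.
sequences = list(product("HT", repeat=10))

all_heads = sum(1 for s in sequences if "T" not in s)
at_least_one_tail = len(sequences) - all_heads

# Now look only at the sequences that start with 9 heads in a row.
after_nine_heads = [s for s in sequences if s[:9] == ("H",) * 9]
tenth_is_heads = sum(1 for s in after_nine_heads if s[9] == "H")

print(len(sequences), all_heads, at_least_one_tail)  # 1024 1 1023
print(len(after_nine_heads), tenth_is_heads)         # 2 1
```

Only two sequences start with 9 heads, and exactly one of them ends with a 10th head: the 10th toss is a 50-50 affair, whatever the odds were for the series as a whole.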

C. Other paradoxes

Crocodile dilemma

(Re: https://en.wikipedia.org/wiki/Crocodile_dilemma)

(It is also known as the "crocodile paradox". Although its description involves a totally unrealistic situation, as you will see, and it could be described in a hundred more realistic ways, I use the original version, as it appears in Wikipedia.)

The Crocodile dilemma is an unsolvable problem in logic. The premise states that a crocodile who has stolen a child promises the father that his son will be returned if and only if he can correctly predict what the crocodile will do.

The outcome is logically smooth (but unpredictable) if the father guesses that the child will be returned, but a dilemma arises for the crocodile if the father guesses that the child will not be returned: 1) If the crocodile decides to keep the child, he violates his terms: the father's prediction has been validated, and the child should be returned. 2) If the crocodile decides to give back the child, he still violates his terms, even if this decision is based on the previous result: the father's prediction has been falsified, and the child should not be returned.

Therefore, the question of what the crocodile should do is paradoxical, and there is no justifiable solution.

Relation to "The king and the jester" riddle

The king, who was tired of his jester and looked for an excuse to get rid of him, calls him in one day and says to him: "Say something, anything you want. If what you say is a lie I will hang you and if it is true I will slaughter you."

The jester thought for a while and then he said something to the king. And lived!

What did he say?


The card paradox

(Re: https://en.wikipedia.org/wiki/Card_paradox)

Suppose there is a card with statements printed on both sides:

Front: The statement on the other side of this card is TRUE.

Back: The statement on the other side of this card is FALSE.

Trying to assign a truth value to either of them leads to a paradox.

Lying paradoxes

1. Epimenides was a Cretan who made an immortal statement: "All Cretans are liars"

2. "This sentence is false"

3. "I am lying"

4. What happens if Pinocchio says that his nose is about to grow, knowing that it actually grows ONLY when he is telling a lie?

The treachery of images

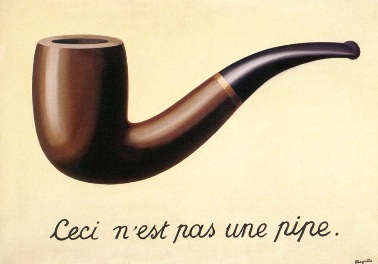

(Re: https://en.wikipedia.org/wiki/The_Treachery_Of_Images)

A painting by the Belgian René Magritte, featuring a smoking pipe and a message at the bottom saying "Ceci n'est pas une pipe." ("This is not a pipe.") Indeed, the painting is not a pipe, but rather an image of a pipe!

Socratic paradox

Socrates' famous phrase: "I know one thing, that I know nothing"

Unexpected test paradox

At the start of the week, a teacher announces to his students that he will give them an unexpected test this week.

Now, the students think that logically the test cannot be given on Friday, because it would be expected, since it is the last remaining day. So the teacher would not give the test on Friday. In the same way, he could not give it on Thursday, because it would be expected, since it is the last remaining day once Friday is excluded. And so on ... So, the teacher cannot give an unexpected test!

Wrong! The teacher may well give the test on Friday, and it will be unexpected, since the students, based on the above reasoning, would not expect it on Friday!

Actually, he can give the test any day, since, based on the above reasoning, the students believe it cannot be unexpected anyway!

The Sorites' paradox

Consider a heap of sand from which grains are removed one at a time. One might well reason as follows: 1) 1,000,000 grains of sand is a heap of sand. 2) A heap of sand minus one grain is still a heap. 3) Repeated application of (2), each time starting with one grain less, eventually forces one to accept the conclusion that a heap may be composed of just one grain of sand.

The paradox of the court

(From Smullyan's Puzzle Book)

This is a very old problem in logic stemming from ancient Greece. It is said that the famous sophist Protagoras accepted a young man, Euathlus, as a pupil on credit, on the understanding that he would be paid for his instruction after Euathlus had won his first case. So Protagoras waited for Euathlus to take on a client, but this never happened. So he decided to sue Euathlus for the amount owed.

Protagoras argued that if he won the case he would be paid his money. If Euathlus won the case, Protagoras would still be paid according to the original contract, because Euathlus would have won his first case.

Euathlus, however, claimed that if he won then by the court's decision he would not have to pay Protagoras. If on the other hand Protagoras won then Euathlus would still not have won a case and therefore not be obliged to pay. The question is: which of the two men is in the right?

Answer: The court decision will be based on what has already happened, not on what will happen after the court decision is made. So, actually, there's no case at all, since Euathlus has not yet won a case!

Now, here's another thing that Protagoras could do: He should let Euathlus win the case and then sue him again, in which case he should legally get his money since Euathlus would have won his first case!

(This seems a better and more useful math problem. But then, it wouldn't be a paradox!)

Always-winning roulette method

This is something I thought of in 2004, and it can be seen either as simply a betting method (successful or defective) or as a paradox.

You bet one chip on Red. If Red comes out, you keep the earned chip and you bet another chip, always on Red. If Black comes out, you bet 2 chips on Red in the next round. If Red then comes out, you get 4 chips back, so you have earned one more chip (after subtracting the 3 chips you have bet in total). You keep the earned chip and you bet another chip, always on Red. In short, the method can be summarized as follows: "Whenever you win, you keep the earned chip, and whenever you lose, you double the amount of the previous bet." In this way, you always earn one chip!

Can this be possible? Can this method work on a standard basis?

Let's see.

I have tested this by playing roulette games (online and offline) and also with a program I created that produced statistics. Well, after about 5 minutes (i.e. about 100 roulette rounds), I was always over my initial amount (capital). (One also has to take into account the extra 1/37 chance in each round that zero comes up, in which case both Red and Black lose. But it's too small a probability to affect the results.) So, it looks like it's a successful method, doesn't it?

However! (There's always a "but" in these cases!:)

1. If this worked, casinos would have closed down! True, but this doesn't say where the problem is, i.e. what is the factor or factors that make this method unsuccessful.

2. There are betting limitations, different in each casino (which you can see near the roulette table). The most important of them in our case is the maximum bet. The lower this limit is, the greater the chances that you lose a lot of chips because you couldn't double the bet anymore! (BTW, it is assumed that there's no limit to the amount of money you have available to bet.)

3. Each roulette round lasts, what, 5 minutes (from the start of betting to the stop of the ball on the roulette wheel). Earning one chip every five minutes is quite tiresome ... 6 chips in about one hour!

4. You will be eventually thrown out of the casino!

5. At a certain point of an unlucky streak, the betting sum will be too large compared to the expected gain (one chip).

6. Finally, the probability of going bankrupt, as small as this may be, compared to the small amount of money you can earn, is quite discouraging.
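Points 2, 5 and 6 can be explored with a small simulation. This is not the program I mentioned above but a separate, hedged sketch: the function names, the starting capital of 100 chips, the maximum bet of 64 chips and the rule "go back to a 1-chip bet when you can no longer double" are all assumptions made for the illustration, as is the single-zero wheel with 18 red pockets out of 37.

```python
import random


def play_doubling_method(spins, bankroll, max_bet):
    """Apply the doubling method to a sequence of spins (True = Red comes out)."""
    bet = 1
    for red in spins:
        if bet > max_bet or bet > bankroll:
            bet = 1        # cannot double any further: accept the loss, start over
        if bankroll == 0:
            break          # nothing left to bet
        if red:
            bankroll += bet
            bet = 1
        else:
            bankroll -= bet
            bet *= 2
    return bankroll


def random_spins(rounds, rng):
    # Assumed single-zero wheel: 18 of the 37 pockets are red.
    return [rng.randrange(37) < 18 for _ in range(rounds)]


if __name__ == "__main__":
    rng = random.Random(2004)
    start = 100
    results = [play_doubling_method(random_spins(100, rng), start, max_bet=64)
               for _ in range(2000)]
    print("sessions ending ahead: ", sum(r > start for r in results) / len(results))
    print("average final bankroll:", sum(results) / len(results))
    print("worst session:         ", min(results))
```

The interesting thing to compare is the share of sessions that end ahead with the average final bankroll and the worst session: many small wins on one side, a few large losses on the other.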

But you can always do that for fun ...

D. Short, funny paradoxes

What is better than eternal bliss? Nothing. But a slice of bread is better than nothing. So a slice of bread is better than eternal bliss.

Your mission is not to accept the mission. Do you accept?

(Variation: Your order is not to execute the order. Would you execute it?)

Answer truthfully ("Yes" or "No"): Will the next word you say be "No"?

If the temperature this morning is 0 degrees and the Weather Channel says, "It will be twice as cold tomorrow", what will the temperature be?

(This is very interesting ... Even with -2 degrees ... -4 degrees does not feel twice as cold, it's only slightly colder.)

Everyday life statements:

• Nobody goes to that restaurant; it's too crowded.

• Don't go near the water 'til you have learned how to swim.

• The man who wrote such a stupid sentence can not write at all.

Contact:

I will be glad to receive your comments and suggestions at a_pis@otenet.gr